Skift Take

We haven’t been able to test Meta’s live translation, so we can’t say how seamless it will be. But development of these products is moving fast.

The Ray-Ban Meta AI-powered glasses are getting a feature for live voice translation.

Mark Zuckerberg, CEO and founder of Meta, demonstrated the new feature and a slew of other updates during the keynote speech of the Meta Connect developer conference, streaming from the company’s main campus in California.

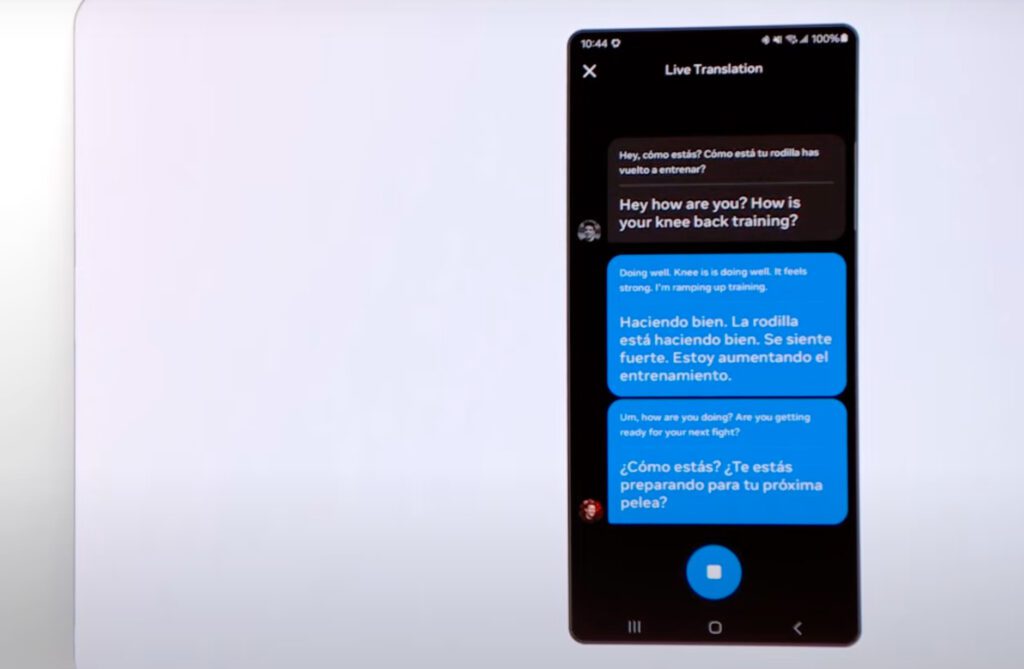

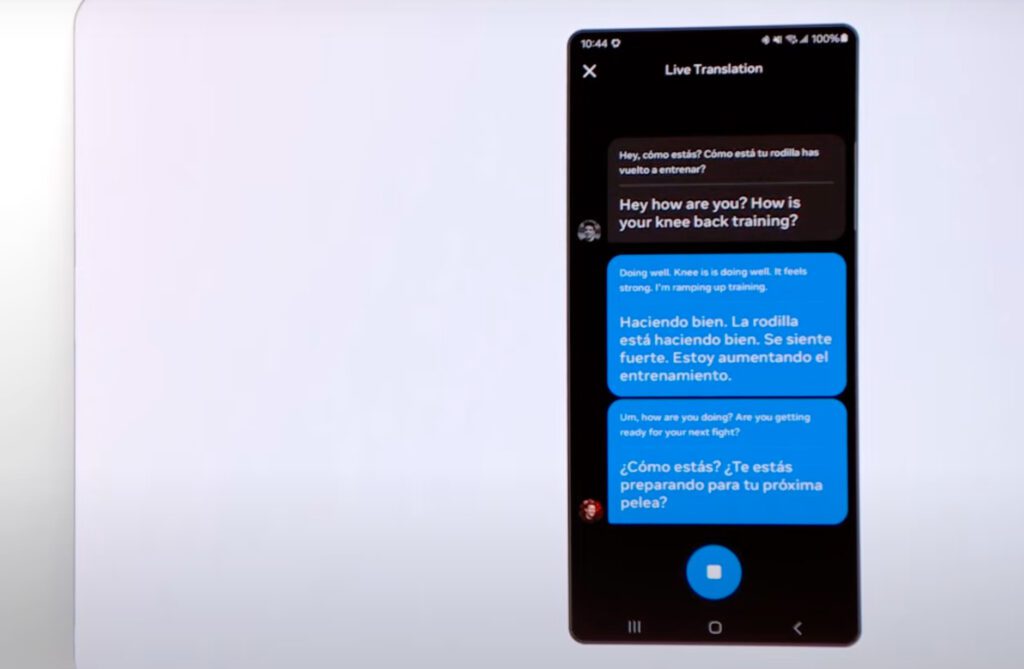

When talking to someone who speaks Spanish, French, or Italian, the user wearing Ray-Bans should be able to hear a real-time English translation through a speaker in the glasses, he said. The user then replies in English, and a mic in the glasses picks up the voice and transfers it to the user’s connected mobile app. The translation from English to the other language comes through the app, which the user then shows to the other person.

The company did not say when the update is coming but said it would be soon. The company plans to add more languages in the future.

Meta earlier this year shared that it was integrating voice-activated AI into the glasses, which meant it could translate menus and answer questions about landmarks seen through the lenses.

The Ray-Bans are available for as little as $299.

Live Demo Between Spanish and English

Zuckerberg demonstrated the new translation feature with Brandon Moreno, former two-time UFC Flyweight Champion. Moreno spoke in Spanish to Zuckerberg, and Zuckerberg responded in English to Moreno.

The demo was brief, but the chatbot was able to translate in real time despite some slang and pauses.

OpenAI earlier this year demonstrated an upgrade for live voice translation through its app, which would understand non-verbal cues like exhalation and tone of voice. The feature has not become widely available yet.

Upgraded AI Model

The new features for the glasses are powered by Meta’s latest AI model, Llama 3.2, revealed during the presentation on Wednesday.

The Meta AI chatbot (accessible through WhatsApp, Instagram, Messenger, and Facebook) is getting an upgrade with the new model. It includes “multi-modal” capabilities, which means users can interact with the chatbot using text, photos, and speech.

Another new AI translation feature: Automatic video dubbing for Reels, starting with English and Spanish, with automatic lip syncing so it appears as if the subject is speaking the language.